Running K8s on a Local Machine with K3s

Kubernetes (K8s) is a container orchestration tool used to automate the deployment, scaling, and management of containerized applications. Running it in production typically requires cloud infrastructure or a cluster of servers. However, some tools let you reproduce that kind of setup locally by running the cluster nodes as containers. With K3s and K3d, you can simulate a production-ready Kubernetes environment on your local machine using Docker containers.

What is K3s?

K3s is a lightweight, fully compliant Kubernetes distribution designed for IoT devices, edge computing, and local development. Despite its lightweight nature, K3s supports all the core features of Kubernetes and can run production-grade applications. Because a full Kubernetes deployment can be very complex, K3s is a great fit for developers who want to simulate a cluster with limited resources.

K3s is an alternative to the overhead of the full-fledged setup. If you want to know more about how the real thing is assembled by hand, here it is: https://github.com/kelseyhightower/kubernetes-the-hard-way

What is Kubernetes (K8s)?

Kubernetes, often referred to as K8s, is an open-source platform for automating the management of containerized applications. It helps you manage containers across multiple hosts, automate deployments, scale applications, and manage rollbacks. Kubernetes is a robust platform used in production environments to handle large-scale, complex workloads, typically on cloud or distributed environments.

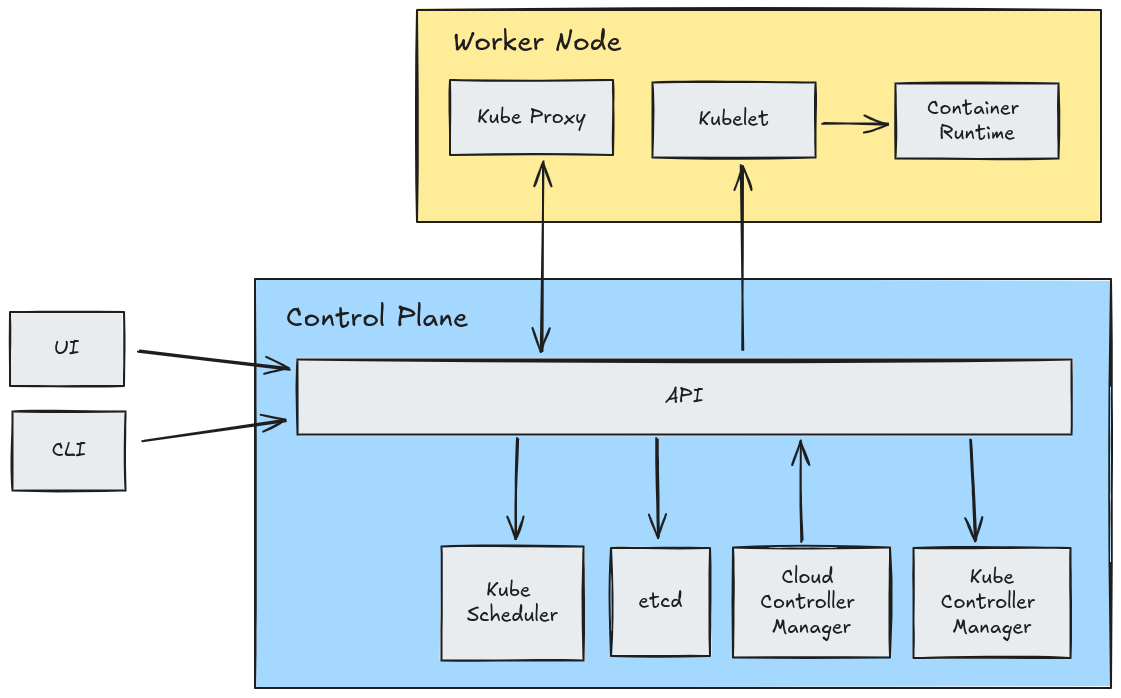

K8s architecture is basically like follows:

Step-by-Step: Running Kubernetes Locally with K3d

K3d is a wrapper around K3s that makes it easier to create and manage Kubernetes clusters in Docker containers. Here’s how to set up a K3d-managed Kubernetes cluster on your local machine.

1. Install Docker

Docker is required to run the K3s containers on your local machine. You can install Docker by following the official guide https://docs.docker.com/get-docker/.

2. Install K3d

After Docker is installed, you can install K3d. K3d provides an easy way to spin up K3s clusters within Docker containers.

To install K3d, run the following command:

curl -s https://raw.githubusercontent.com/k3d-io/k3d/main/install.sh | bash
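Before moving on, it can help to confirm that the command-line tools are reachable. The sketch below assumes you also need kubectl for the later steps (the k3d install script does not provide it); `missing_tools` is a hypothetical helper name, not part of any of these tools.

```shell
# Hypothetical helper: print every tool from the argument list that is
# not found on PATH, one per line.
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
  done
}

# docker and k3d come from steps 1 and 2; kubectl is assumed to be
# installed separately.
missing=$(missing_tools docker k3d kubectl)
if [ -n "$missing" ]; then
  printf 'Missing tools:\n%s\n' "$missing"
else
  printf 'All tools found; k3d reports: %s\n' "$(k3d version | head -n 1)"
fi
```

If anything is listed as missing, install it before creating the cluster.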

3. Create a K3d Cluster

To create a K3d cluster with one server node (master) and two agent nodes (workers), run the following command:

k3d cluster create cluster-1 --servers 1 --agents 2

Notice that "cluster-1" is the name of the cluster.

This command sets up a Kubernetes cluster inside Docker containers, simulating a production environment.
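Since every node is just a Docker container, you can look at them with Docker itself. Here is a small sketch, assuming the k3d-<cluster>-<role>-<index> naming pattern that shows up in the `kubectl get nodes` output in step 4; `expected_nodes` is a hypothetical helper used only for illustration.

```shell
# Hypothetical helper: print the node names expected for a cluster,
# assuming the k3d-<cluster>-<role>-<index> naming pattern.
expected_nodes() {
  cluster=$1
  servers=$2
  agents=$3
  i=0
  while [ "$i" -lt "$servers" ]; do
    printf 'k3d-%s-server-%s\n' "$cluster" "$i"
    i=$((i + 1))
  done
  i=0
  while [ "$i" -lt "$agents" ]; do
    printf 'k3d-%s-agent-%s\n' "$cluster" "$i"
    i=$((i + 1))
  done
}

# Names expected for the cluster created above (1 server, 2 agents).
expected_nodes cluster-1 1 2

# If Docker is available, list the containers that actually exist.
# k3d may also run helper containers, such as a load balancer.
if command -v docker >/dev/null 2>&1; then
  docker ps --filter "name=k3d-cluster-1" --format '{{.Names}}' || true
fi
```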

4. Verify the Kubernetes Cluster

After the cluster is created, you can verify that it's running correctly with the following commands:

kubectl get all
# Output:
# NAME                 TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
# service/kubernetes   ClusterIP   10.43.0.1    <none>        443/TCP   10m

kubectl cluster-info
# Output:
# Kubernetes control plane is running at https://0.0.0.0:32915
# CoreDNS is running at https://0.0.0.0:32915/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy
# Metrics-server is running at https://0.0.0.0:32915/api/v1/namespaces/kube-system/services/https:metrics-server:https/proxy

kubectl get nodes
# Output:
# NAME                     STATUS   ROLES                  AGE   VERSION
# k3d-cluster-1-agent-0    Ready    <none>                 10m   v1.30.4+k3s1
# k3d-cluster-1-agent-1    Ready    <none>                 10m   v1.30.4+k3s1
# k3d-cluster-1-server-0   Ready    control-plane,master   10m   v1.30.4+k3s1

These commands display information about the cluster and list the nodes running in your environment.
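If you want to check node readiness from a script instead of reading the table by eye, something like the following could work. It is only a sketch: `count_ready_nodes` is a hypothetical helper, and the sample input is copied from the node list above rather than taken from a live cluster.

```shell
# Hypothetical helper: count nodes whose STATUS column is exactly "Ready"
# in `kubectl get nodes --no-headers` style output read from stdin.
count_ready_nodes() {
  awk '$2 == "Ready" { n++ } END { print n + 0 }'
}

# Sample input copied from the node list in this article.
sample='k3d-cluster-1-agent-0 Ready <none> 10m v1.30.4+k3s1
k3d-cluster-1-agent-1 Ready <none> 10m v1.30.4+k3s1
k3d-cluster-1-server-0 Ready control-plane,master 10m v1.30.4+k3s1'

printf '%s\n' "$sample" | count_ready_nodes   # prints 3
```

Against a live cluster, the same helper would be fed by `kubectl get nodes --no-headers | count_ready_nodes`. Alternatively, `kubectl wait --for=condition=Ready nodes --all --timeout=120s` blocks until every node reports Ready or the timeout expires.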

5. Switching Contexts Between Clusters

If you are working with multiple Kubernetes clusters (e.g., local clusters managed by K3d and remote clusters hosted in the cloud), you can switch contexts easily. Kubernetes uses a kubeconfig file to store the configuration for different clusters.

To list your current contexts:

kubectl config get-contexts

To switch between contexts (e.g., from a K3d cluster to a remote one):

kubectl config use-context <context-name>

This allows you to seamlessly switch between managing your local cluster and any remote ones.
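For clusters created by K3d, the context is registered in your kubeconfig as "k3d-" followed by the cluster name. The sketch below builds that name for the cluster from step 3 and switches to it only if the context actually exists; `k3d_context_name` is a hypothetical helper.

```shell
# Hypothetical helper: k3d registers each cluster in kubeconfig under a
# context named "k3d-<cluster-name>".
k3d_context_name() {
  printf 'k3d-%s\n' "$1"
}

ctx=$(k3d_context_name cluster-1)
echo "$ctx"   # prints k3d-cluster-1

# Switch only when kubectl is installed and the context is present.
if command -v kubectl >/dev/null 2>&1 &&
   kubectl config get-contexts -o name 2>/dev/null | grep -qxF "$ctx"; then
  kubectl config use-context "$ctx"
  kubectl config current-context
fi
```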

6. Deploy an Nginx Service

To deploy a simple Nginx service in your Kubernetes cluster, you can use the declarative approach. In Kubernetes, a declarative approach means that instead of issuing commands imperatively to start services, you define the desired state of your system using YAML or other configuration files, and Kubernetes works to maintain that state.

First, create an Nginx deployment yaml file:

File: nginx-deployment.yml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  labels:
    app: nginx
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:latest
          ports:
            - containerPort: 80

Next, create a service to wrap this deployment and load balance its replicas:

File: nginx-service.yml

apiVersion: v1
kind: Service
metadata:
  name: nginx-service
spec:
  selector:
    app: nginx
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
      nodePort: 30080
  type: NodePort
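One detail worth knowing about the nodePort value: by default Kubernetes only accepts NodePort values between 30000 and 32767, which is why 30080 works here and something like 8080 would be rejected. A tiny sketch of that check, where `is_valid_nodeport` is a hypothetical helper and the range is the Kubernetes default (a cluster can be configured with a different one):

```shell
# Hypothetical helper: succeed when the port is inside the default
# Kubernetes NodePort range (30000-32767).
is_valid_nodeport() {
  [ "$1" -ge 30000 ] && [ "$1" -le 32767 ]
}

for port in 30080 8080; do
  if is_valid_nodeport "$port"; then
    echo "$port: inside the default NodePort range"
  else
    echo "$port: outside the default NodePort range"
  fi
done
```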

Finally, create an Ingress that tells the ingress controller how to route incoming HTTP traffic to our service:

File: ingress.yml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: traefik-ingress
  annotations:
    kubernetes.io/ingress.class: traefik
spec:
  rules:
    - host: nginx.localhost
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: nginx-service
                port:
                  number: 80

Note: I chose Traefik because it is the default ingress controller bundled with K3s.

Now it is time to tell your cluster about all the state declared in your YAML files:

kubectl apply -f nginx-deployment.yml

kubectl apply -f nginx-service.yml

kubectl apply -f ingress.yml
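If you prefer to catch mistakes in the manifests before anything is created, one option is to run each file through a client-side dry run first and then wait for the rollout. This is a sketch under assumptions: `apply_validated` is a hypothetical helper, and the `KUBECTL` variable exists only so the calls can be previewed (for example with `KUBECTL=echo`).

```shell
# KUBECTL can be overridden (for example with "echo") to preview the calls.
KUBECTL=${KUBECTL:-kubectl}

# Hypothetical helper: run every manifest through a client-side dry run
# first, then apply them in the order given. Stops at the first failure.
apply_validated() {
  for manifest in "$@"; do
    $KUBECTL apply --dry-run=client -f "$manifest" || return 1
  done
  for manifest in "$@"; do
    $KUBECTL apply -f "$manifest" || return 1
  done
}

# Only run when the manifest files from this article are present.
if [ -f nginx-deployment.yml ] && [ -f nginx-service.yml ] && [ -f ingress.yml ]; then
  apply_validated nginx-deployment.yml nginx-service.yml ingress.yml &&
    $KUBECTL rollout status deployment/nginx-deployment --timeout=120s
fi
```

Because `kubectl apply` is declarative, running it again with unchanged files should leave the cluster as it is.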

To verify that the service is running and see the assigned NodePort:

kubectl get services
# NAME            TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)        AGE
# kubernetes      ClusterIP   10.43.0.1      <none>        443/TCP        15m
# nginx-service   NodePort    10.43.72.114   <none>        80:30080/TCP   15m

Now you can retrieve the IP addresses of your cluster nodes and visit the page on port 30080 (remember the nodePort we added to our Service?):

kubectl get ingress traefik-ingress --namespace default
# NAME              CLASS    HOSTS             ADDRESS                            PORTS   AGE
# traefik-ingress   <none>   nginx.localhost   172.19.0.2,172.19.0.3,172.19.0.4   80      3m52s

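If you grab any address in the ADDRESS column and visit it in your browser on port 30080, for example "http://172.19.0.4:30080" in the case here, you'll see the Nginx welcome page. Keep in mind that these Docker-internal addresses are usually reachable directly from the host only on Linux.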
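Note that port 30080 goes straight to the Service and bypasses the Ingress. To exercise the Ingress rule itself, the request has to carry the nginx.localhost host name. The sketch below shows both paths with curl. It assumes the default K3s setup, where Traefik listens on port 80 of the node addresses, and uses the example address from the output above; `nodeport_url` is a hypothetical helper and the probes only run when you set PROBE=1.

```shell
# One address from the ADDRESS column; override it for your own cluster.
NODE_IP=${NODE_IP:-172.19.0.4}

# Hypothetical helper: build the URL for a given node address and port.
nodeport_url() {
  printf 'http://%s:%s/\n' "$1" "$2"
}

echo "NodePort URL: $(nodeport_url "$NODE_IP" 30080)"

# Only send requests when explicitly asked to (PROBE=1).
if [ "${PROBE:-0}" = "1" ]; then
  # Straight to the Service through the NodePort, bypassing the Ingress.
  curl -s -o /dev/null -w '%{http_code}\n' --max-time 5 \
    "$(nodeport_url "$NODE_IP" 30080)"
  # Through Traefik on port 80 (assumed default); the Host header must
  # match the host in the Ingress rule.
  curl -s -o /dev/null -w '%{http_code}\n' --max-time 5 \
    -H 'Host: nginx.localhost' "http://$NODE_IP/"
fi
```

If both requests print 200, the Service and the Ingress route are each working.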

You can destroy it all using a single k3d command:

k3d cluster delete cluster-1

As before, "cluster-1" is the name of the cluster.

Conclusion

Running a production-ready Kubernetes cluster locally using Docker and K3s (via K3d) offers a lightweight, easy-to-manage alternative to traditional cloud-hosted clusters. You can set up and manage your cluster quickly, verify the configuration, switch between different contexts (local and remote), and deploy services in a declarative manner, which is a best practice for managing modern infrastructure.

This method provides a powerful environment for development, testing, and learning without needing full cloud infrastructure, while allowing you to practice and master the declarative management of Kubernetes services.